What is a P value?

A P value is a statistical measure used to judge whether a difference observed between two groups could plausibly be explained by chance. More precisely, it is the probability of observing a difference at least as large as the one you found if random sampling error were the only thing at work, that is, if there were no true difference between the groups.

For instance, if you conducted an experiment with two groups, A and B, and found that group A had a higher mean than group B, the P value would tell you how often a difference that large would arise by chance alone. If the P value is low (conventionally below 0.05), a difference this large would rarely occur by chance alone, and this is taken as evidence of a real difference between the two groups. Conversely, if the P value is high (above 0.05), the observed difference could easily have occurred by chance, and you cannot conclude that there is a real difference between the two groups.

What is a null hypothesis?

In statistical discussions of P values, the concept of a null hypothesis is commonly used. The null hypothesis states that there is no difference between the populations being compared. In this context, the P value can be defined as the probability of obtaining a difference in the sample means as large as, or larger than, the observed difference, if the null hypothesis were actually true.
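The definition above can be made concrete with a permutation test, which is one simple way (not the only one, and not necessarily what any particular program does) to estimate a P value. The sketch below assumes made-up data and a hypothetical helper named `permutation_p_value`. If the null hypothesis is true, the group labels are arbitrary, so the code shuffles them many times and counts how often the shuffled difference in means is at least as large as the observed one.

```python
import random

def permutation_p_value(a, b, n_perm=5000, seed=0):
    """Estimate a two-tail P value for the difference in means by shuffling labels."""
    rng = random.Random(seed)
    n_a = len(a)
    pooled = list(a) + list(b)

    def mean_diff(values):
        left, right = values[:n_a], values[n_a:]
        return sum(left) / len(left) - sum(right) / len(right)

    observed = abs(mean_diff(pooled))
    at_least_as_large = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # relabel the observations at random
        # Small tolerance guards against floating-point rounding in the sums.
        if abs(mean_diff(pooled)) >= observed - 1e-12:
            at_least_as_large += 1
    # Count the observed arrangement itself so the estimate is never exactly 0.
    return (at_least_as_large + 1) / (n_perm + 1)

group_a = [5.1, 4.9, 5.6, 5.8, 6.0, 5.3]  # made-up data for illustration
group_b = [4.2, 4.5, 4.1, 4.8, 4.4, 4.6]  # made-up data for illustration
p = permutation_p_value(group_a, group_b)
print(f"P = {p:.4f}")
```

Because every value in the first group exceeds every value in the second, very few random relabelings produce a difference this large, so the estimated P value is small.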

Common misinterpretation of a P value

A common misunderstanding of P values can lead to incorrect conclusions. For example, if the P value is reported as 0.03, it is often mistakenly assumed that there is a 97% chance that the observed difference is a real difference between two populations, and only a 3% chance that it is due to chance alone. However, this interpretation is incorrect.

What the P value actually means is that if the null hypothesis (that there is no difference between the populations) is true, there is a 3% chance of obtaining a difference as large as, or larger than, the observed difference. In other words, random sampling from identical populations would produce a difference smaller than the observed difference in 97% of experiments and a difference as large or larger in 3% of experiments. The P value does not, by itself, give the probability that the difference between the populations is real.
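The "97% of experiments versus 3% of experiments" statement can be checked by simulation. The sketch below is an illustration under stated assumptions: both samples come from the same normal population with a known standard deviation, each group has 10 observations, and the function name `fraction_at_least` is hypothetical. It picks the difference that corresponds to a two-tail P value of 0.03 in that setting, then counts how often identical populations produce a difference at least that large.

```python
import random
from statistics import NormalDist

def fraction_at_least(observed_diff, n=10, sigma=1.0, n_experiments=20_000, seed=1):
    """Fraction of simulated experiments on identical populations whose
    absolute difference in sample means is at least observed_diff."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        a = [rng.gauss(0.0, sigma) for _ in range(n)]
        b = [rng.gauss(0.0, sigma) for _ in range(n)]
        if abs(sum(a) / n - sum(b) / n) >= observed_diff:
            hits += 1
    return hits / n_experiments

n, sigma = 10, 1.0                      # assumed group size and known SD
se = sigma * (2 / n) ** 0.5             # standard error of the difference in means
observed = NormalDist().inv_cdf(1 - 0.03 / 2) * se  # difference with two-tail P = 0.03

frac = fraction_at_least(observed, n=n, sigma=sigma)
print(f"Fraction of null experiments with a difference this large: {frac:.3f}")
```

The printed fraction should land close to 0.03. Note that every simulated experiment here has no real difference at all, which is why the P value cannot be read as the chance that a difference is real.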

One-tail vs. two-tail P values

In comparing two groups, it is important to differentiate between one-tail and two-tail P values, which are both based on the same null hypothesis that the two populations are identical, and any observed difference is due to chance.

A two-tail P value is the probability, assuming the null hypothesis is true, of randomly selecting samples whose means are as far apart as, or further apart than, what was observed in the experiment, with either group having the larger mean.

On the other hand, a one-tail P value requires you to predict which group will have the larger mean before collecting data. It is the probability, assuming the null hypothesis is true, of randomly selecting samples whose means are as far apart as, or further apart than, what was observed in the experiment, with the specified group having the larger mean. A one-tail P value should only be used when previous data or physical limitations suggest that a difference, if any, can only occur in one direction.
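The two definitions differ only in which relabelings count as "as extreme". The sketch below, again using made-up data and a hypothetical function name, enumerates every way of splitting the pooled values into two groups and computes both P values exactly. The one-tail prediction is assumed to be that the first group has the larger mean.

```python
from itertools import combinations

def exact_permutation_p_values(a, b):
    """Return (one_tail, two_tail) P values for mean(a) - mean(b).
    The one-tail prediction is that group a has the larger mean."""
    pooled = list(a) + list(b)
    n_a, n_b = len(a), len(b)
    total = sum(pooled)

    def diff_for(sum_a):
        return sum_a / n_a - (total - sum_a) / n_b

    observed = diff_for(sum(a))
    tol = 1e-12  # guards against floating-point rounding
    one = two = count = 0
    for idx in combinations(range(len(pooled)), n_a):
        d = diff_for(sum(pooled[i] for i in idx))
        count += 1
        if d >= observed - tol:            # same direction, at least as large
            one += 1
        if abs(d) >= abs(observed) - tol:  # either direction, at least as large
            two += 1
    return one / count, two / count

group_a = [5.1, 4.9, 5.6, 5.8, 6.0, 5.3]  # made-up data for illustration
group_b = [4.2, 4.5, 4.1, 4.8, 4.4, 4.6]  # made-up data for illustration
one_tail, two_tail = exact_permutation_p_values(group_a, group_b)
print(f"one-tail P = {one_tail:.5f}, two-tail P = {two_tail:.5f}")
```

With equal group sizes, the two-tail value here is exactly twice the one-tail value. Swapping the arguments, so that the data contradict the prediction, gives a one-tail P value of 1.0 even though the two-tail value is unchanged.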

However, it is usually better to use a two-tail P value for several reasons. The relationship between P values and confidence intervals is easier to understand with two-tail P values. Some tests involve three or more groups, making the concept of tails irrelevant, and a two-tail P value is consistent with the P values reported by these tests.

Choosing a one-tail P value can lead to a dilemma if the observed difference is in the opposite direction to the experimental hypothesis. To be rigorous, you must then attribute the difference to chance, however large it is. Using two-tail P values avoids this situation.

Hypothesis testing and statistical significance

Statistical hypothesis testing

Statistical hypothesis testing is a method used to make decisions based on data. It is commonly used to determine whether there is a significant difference between two groups. The process involves setting a threshold P value (the significance level, alpha) before conducting the experiment. The threshold is usually set to 0.05.

The null hypothesis is defined as the assumption that there is no difference between the two groups being compared. The alternative hypothesis is the opposite of the null hypothesis: that the groups do differ. It is the null hypothesis that the statistical test evaluates directly.

Once the null and alternative hypotheses are defined, a statistical test is performed to calculate the P value. If the P value is less than the threshold value, the null hypothesis is rejected, and the difference between the two groups is declared statistically significant. If the P value is greater than the threshold value, the null hypothesis cannot be rejected, and the difference is declared not statistically significant.

It is important to note that failing to reject the null hypothesis does not necessarily mean that the null hypothesis is true. It simply means that there is not enough evidence to reject it. Hypothesis testing should be used in conjunction with other statistical methods to draw conclusions about the data.

Statistical significance in science

The term “significant” is often misleading and can be misinterpreted. In statistical analysis, a result is considered statistically significant if a difference at least that large would occur less than 5% of the time assuming that the populations being compared are identical (using a threshold of alpha=0.05). However, just because a result is statistically significant does not necessarily mean that it is biologically or clinically meaningful or noteworthy. Similarly, a result that is not statistically significant in one experiment may still be important.

If a result is statistically significant, there are two possible explanations:

- The populations are identical, and any observed difference is due to chance. This is called a Type I error, and it occurs when a statistically significant result is obtained even though there is no actual difference between the populations being compared. If a significance level of P<0.05 is used, then this error will occur in approximately 5% of experiments where there is no difference.

- The populations are truly different, and the observed difference is not due to chance. This difference could be significant in the scientific context, or it could be trivial.
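Both explanations can be illustrated by simulation. The sketch below assumes normally distributed data with a known standard deviation, 10 observations per group, and a simple z test. These are choices made for illustration only, and the function names are hypothetical. It estimates how often a result is statistically significant when the populations are identical and when they truly differ by one standard deviation.

```python
import random
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()

def two_tail_p(a, b, sigma):
    """Two-tail P value from a z test, assuming a known common SD (sigma)."""
    n_a, n_b = len(a), len(b)
    se = sigma * (1 / n_a + 1 / n_b) ** 0.5
    z = (sum(a) / n_a - sum(b) / n_b) / se
    return 2 * (1 - STANDARD_NORMAL.cdf(abs(z)))

def fraction_significant(true_diff, alpha=0.05, n=10, sigma=1.0,
                         n_experiments=20_000, seed=2):
    """Fraction of simulated experiments with P < alpha when the population
    means differ by true_diff (0.0 means the populations are identical)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_experiments):
        a = [rng.gauss(true_diff, sigma) for _ in range(n)]
        b = [rng.gauss(0.0, sigma) for _ in range(n)]
        if two_tail_p(a, b, sigma) < alpha:
            hits += 1
    return hits / n_experiments

false_positive_rate = fraction_significant(true_diff=0.0)  # Type I errors
detection_rate = fraction_significant(true_diff=1.0)       # real difference found
print(f"Identical populations: {false_positive_rate:.3f} significant")
print(f"Truly different populations: {detection_rate:.3f} significant")
```

The first fraction should be close to the chosen alpha of 0.05. The second depends on the assumed size of the true difference, the standard deviation, and the sample size.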

It is important to keep in mind that statistical significance does not necessarily imply biological or clinical significance, and that further investigation may be required to fully understand the implications of a particular result.

“Extremely significant” results

In strict statistical terms, it is incorrect to think that P=0.0001 is more significant than P=0.04. Once a threshold P value for statistical significance is established, each result is categorized as either statistically significant or not statistically significant. There are no degrees of statistical significance. Some statisticians strongly adhere to this definition.

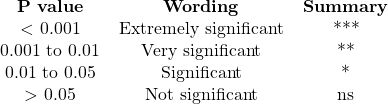

However, many scientists are less rigid and describe results as “almost significant,” “very significant,” or “extremely significant.” Prism summarizes P values using the words in the middle column of the table above, and some scientists use the symbols in the third column to label graphs. These definitions are not entirely standardized, so if you choose to report results in this way, you should provide a clear definition of the symbols in your figure legend.

Report the actual P value

The concept of statistical hypothesis testing is useful in quality control, where a definite accept/reject decision must be made based on a single analysis. However, experimental science is more complex, and decisions should not be based solely on a significant/not significant threshold. Instead, it is important to report exact P values, which can be interpreted in the context of all relevant experiments and analyses.

The tradition of reporting only whether the P value is less than or greater than 0.05 was necessitated by the lack of easy access to computers and the need for statistical tables. However, with the widespread availability of computing power, it is now simple to calculate exact P values and there is no need to limit reporting to a threshold value.